Supercharge your knowledge base with Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is a powerful AI architecture that combines the capabilities of large language models (LLMs) with your organization's proprietary data. A RAG system retrieves relevant information from your knowledge base and passes it to the LLM as context for generating a response, resulting in more accurate, contextual, and reliable AI outputs.

At BoDuo, we specialize in implementing custom RAG systems that help businesses leverage their existing data to create powerful AI applications. Our RAG implementations can transform how your organization manages knowledge, supports customers, analyzes data, and makes decisions.

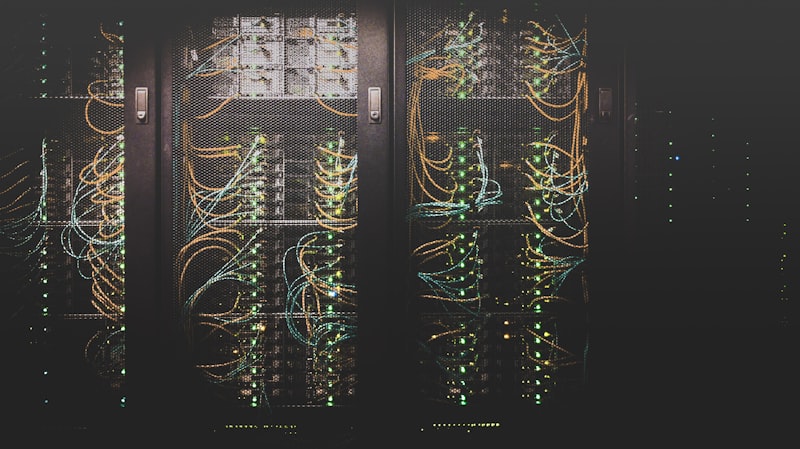

The architecture behind Retrieval Augmented Generation

1. Your organization's documents, data, and information are processed and indexed for efficient retrieval.
2. When a query is received, the system retrieves the most relevant information from your knowledge base.
3. The retrieved information is used to augment the context provided to the large language model.
4. The LLM generates a response based on both its training and the specific context from your knowledge base.
5. User feedback helps improve the system over time, refining retrieval accuracy and response quality.
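To make the flow above concrete, here is a minimal Python sketch of indexing, retrieval, and augmentation under simplifying assumptions: word-count vectors with cosine similarity stand in for learned embeddings and a vector database, the three documents are invented examples, and the generation and feedback steps are left out because they depend on the chosen LLM and application. `build_prompt` only produces the augmented input that would be sent to the model.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Indexing: hypothetical example documents turned into term-count vectors.
documents = {
    "refunds.md": "Refunds are issued within 14 days of a return request.",
    "shipping.md": "Standard shipping takes 3 to 5 business days.",
    "warranty.md": "All devices carry a two-year limited warranty.",
}
index = {doc_id: Counter(tokenize(text)) for doc_id, text in documents.items()}

def retrieve(query: str, k: int = 2) -> list[str]:
    # Retrieval: rank every document by similarity to the query, keep the top k.
    q = Counter(tokenize(query))
    return sorted(index, key=lambda d: cosine(q, index[d]), reverse=True)[:k]

def build_prompt(query: str, k: int = 2) -> str:
    # Augmentation: place the retrieved passages in front of the question.
    context = "\n".join(f"[{d}] {documents[d]}" for d in retrieve(query, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

print(build_prompt("How many days until refunds are issued?", k=1))
```

Swapping `tokenize` and `cosine` for an embedding model and a vector database changes the quality of retrieval, not the shape of the pipeline.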

Why businesses are implementing RAG for their AI applications

RAG systems provide more accurate responses by grounding LLM outputs in your specific data, reducing hallucinations and factual errors.

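Keep control of your proprietary information: your documents stay in your own storage, and only the passages relevant to each query are passed to the model. Pairing RAG with a self-hosted LLM keeps even those passages inside your infrastructure.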

RAG systems can access your most recent data, overcoming the knowledge cutoff limitations of pre-trained LLMs.

Leverage your organization's specialized knowledge to create AI applications with deep expertise in your specific domain.

Reduce costs by adapting general-purpose LLMs to your specific needs with RAG instead of fine-tuning large models.

RAG systems can scale with your data, allowing you to continuously improve performance as you add more information to your knowledge base.

How organizations are leveraging RAG technology

Create intelligent support chatbots that can access your product documentation, knowledge base, and support history to provide accurate, contextual responses.

Transform how your organization accesses and utilizes internal knowledge with intelligent search and retrieval systems.

Automatically extract insights, summarize content, and answer questions about large document collections like contracts or research papers.

Create interactive learning experiences that leverage your training materials to provide personalized guidance and answer questions.

Help healthcare providers quickly access relevant patient information, medical literature, and treatment guidelines.

Enable legal professionals to efficiently search through case law, statutes, and legal documents to find relevant precedents.

How we build custom RAG systems for your organization

1. We evaluate your existing data sources, knowledge bases, and information architecture.
2. We organize, clean, and structure your data for optimal retrieval and processing.
3. We implement and configure a vector database for efficient semantic search.
4. We develop the retrieval component that identifies and fetches the most relevant information for each query.
5. We integrate the appropriate large language model and design effective prompts for it.
6. We rigorously test with real-world queries and optimize based on feedback.
7. We deploy the system and integrate it with your existing applications.
8. We provide comprehensive training and ongoing support for your team.
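Part of preparing data for retrieval is usually splitting long documents into overlapping chunks, so each retrieved passage fits in the model's context while still carrying some surrounding text. A minimal Python sketch, assuming whitespace-separated words as a rough proxy for model tokens; `chunk_words` and its default sizes are illustrative, not tuned values.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of chunk_size words; consecutive windows share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

print(chunk_words("0 1 2 3 4 5 6 7 8 9", chunk_size=4, overlap=1))
```

Here the call returns `["0 1 2 3", "3 4 5 6", "6 7 8 9"]`: each chunk repeats the last word of the previous one, so a sentence cut at a boundary still appears whole in at least one chunk when the overlap is large enough. Production splitters often break on headings or sentence boundaries instead of fixed word counts.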

Best-in-class tools and frameworks for powerful RAG systems

Vector databases: specialized databases optimized for storing and querying high-dimensional vector embeddings with fast similarity search.
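A vector database answers one question quickly: which stored embeddings are closest to the query embedding? The brute-force Python sketch below computes exactly that with cosine similarity. Production vector databases typically avoid scoring every vector by using approximate nearest-neighbor indexes such as HNSW; the 2-D vectors here are made-up toy data, whereas real embeddings have hundreds or thousands of dimensions.

```python
import heapq
import math

def normalize(v: list[float]) -> list[float]:
    # Scale to unit length so a dot product equals cosine similarity.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def top_k(query: list[float], store: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    # Exact (brute-force) search: score every stored vector and keep the k highest.
    q = normalize(query)
    scored = (
        (sum(a * b for a, b in zip(q, normalize(vec))), doc_id)
        for doc_id, vec in store.items()
    )
    return [(doc_id, score) for score, doc_id in heapq.nlargest(k, scored)]

store = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
print(top_k([1.0, 0.1], store, k=2))
```

A real database would normalize vectors once at insert time rather than on every query; the sketch does it inline to stay short.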

How our RAG implementations have transformed businesses

Reduced medical research time by 75% and improved diagnostic accuracy by 40% for a major hospital network.

Accelerated case preparation by 60% and increased billable efficiency by 35% for a top law firm.

Decreased response time by 80% and improved customer satisfaction scores by 45% for a SaaS company.

Common questions about RAG systems

How is RAG different from fine-tuning?

While fine-tuning modifies the weights of an LLM to adapt it to specific tasks or domains, RAG leaves the LLM unchanged and instead augments its inputs with relevant retrieved information. RAG is generally more flexible, cost-effective, and easier to update than fine-tuning, because you can update your knowledge base without retraining the model. RAG also tends to produce more factually accurate responses to domain-specific questions because it draws directly on your data.
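The practical difference described above is that changing what a RAG system knows is a write to its knowledge base, not a training run. The Python sketch below illustrates that with a hypothetical `KnowledgeBase` class; word overlap stands in for embedding search, and in a real deployment `upsert` would also compute and store the document's embedding in the vector database.

```python
import re
from typing import Optional

def terms(text: str) -> set[str]:
    # Lowercased word set; a stand-in for an embedding in this sketch.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class KnowledgeBase:
    """Hypothetical in-memory document store for the retrieval side of RAG."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Adding or replacing knowledge is a data write; the LLM's weights are untouched.
        self.docs[doc_id] = text

    def delete(self, doc_id: str) -> None:
        self.docs.pop(doc_id, None)

    def search(self, query: str) -> Optional[str]:
        # Return the document sharing the most words with the query, or None if nothing overlaps.
        q = terms(query)
        best = max(self.docs.values(), key=lambda text: len(q & terms(text)), default=None)
        return best if best is not None and q & terms(best) else None

kb = KnowledgeBase()
kb.upsert("pricing", "The Pro plan costs 20 dollars per month.")
kb.upsert("pricing", "The Pro plan costs 25 dollars per month.")  # price change: overwrite the entry
print(kb.search("How much does the Pro plan cost?"))
```

The next query sees the new price immediately; with fine-tuning, the same change would require preparing training data and running another training job.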

What is RAG and how does it work?

RAG (Retrieval-Augmented Generation) is an AI architecture that combines information retrieval with text generation. It first searches your knowledge base for relevant information, then uses that context to generate accurate, informed responses. This grounds AI responses in your actual data rather than relying solely on the model's training data, so answers are more accurate and up to date.

How long does a RAG implementation take?

Implementation time varies based on the complexity of your data and requirements. A basic RAG system can be deployed in 4-6 weeks, while enterprise-level implementations with complex integrations typically take 8-12 weeks. We follow an agile approach, delivering working prototypes early so you can start testing and providing feedback throughout the development process.

What types of data can a RAG system work with?

RAG systems can process virtually any text-based content, including PDFs, Word documents, web pages, databases, APIs, emails, and structured data formats like JSON and XML. We also support multimedia content through specialized processing pipelines that can extract text from images, transcribe audio, and process video content. Our systems can integrate with existing databases, content management systems, and cloud storage platforms.
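Structured sources such as JSON and XML are typically flattened into text before chunking and embedding, so that field names travel with their values. One possible approach in Python, using only the standard library; `json_to_text` and `xml_to_text` are hypothetical helpers, and PDFs, Word files, audio, and images would need their own extractors.

```python
import json
import xml.etree.ElementTree as ET

def json_to_text(raw: str) -> str:
    # Flatten nested JSON into "path: value" lines so nested fields stay searchable.
    lines: list[str] = []

    def walk(node, path: str) -> None:
        if isinstance(node, dict):
            for key, value in node.items():
                walk(value, f"{path}.{key}" if path else str(key))
        elif isinstance(node, list):
            for i, value in enumerate(node):
                walk(value, f"{path}[{i}]")
        else:
            lines.append(f"{path}: {node}")

    walk(json.loads(raw), "")
    return "\n".join(lines)

def xml_to_text(raw: str) -> str:
    # Emit "tag: text" for each element whose own leading text is non-empty (tail text is ignored).
    root = ET.fromstring(raw)
    return "\n".join(
        f"{el.tag}: {el.text.strip()}" for el in root.iter() if el.text and el.text.strip()
    )

print(json_to_text('{"product": "Router X", "specs": {"ports": 4}, "tags": ["wifi", "mesh"]}'))
print(xml_to_text("<product><name>Router X</name><ports>4</ports></product>"))
```

A production pipeline would also attach the source file and record ID to each resulting chunk as metadata, so answers can point back to where the data came from.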

How accurate are RAG systems compared with traditional chatbots?

RAG systems typically achieve 85-95% accuracy, compared with 60-70% for traditional rule-based chatbots, although the exact figures depend on the quality of your knowledge base and how accuracy is measured. The improvement comes from grounding responses in your actual knowledge base rather than in pre-programmed replies. RAG systems can also cite their sources, which lets users verify information and builds trust in the system's responses.
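Source citations usually come from labeling each retrieved passage in the prompt and asking the model to reference those labels. The Python sketch below assumes a `[n]` citation convention (an assumption, not a standard) and uses a hard-coded string in place of a real model answer; `number_sources` and `cited_sources` are hypothetical helpers.

```python
import re

Passage = tuple[str, str]  # (source name, passage text)

def number_sources(passages: list[Passage]) -> str:
    # Label each retrieved passage [1], [2], ... so the model can cite it by number.
    return "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, start=1)
    )

def cited_sources(answer: str, passages: list[Passage]) -> list[str]:
    # Map [n] markers in the answer back to source names, in order of first use.
    # Numbers that match no retrieved passage are ignored (a UI might flag them instead).
    cited: list[str] = []
    for n in map(int, re.findall(r"\[(\d+)\]", answer)):
        if 1 <= n <= len(passages) and passages[n - 1][0] not in cited:
            cited.append(passages[n - 1][0])
    return cited

passages = [
    ("handbook.pdf", "Employees receive 25 vacation days per year."),
    ("faq.html", "Vacation requests go through the HR portal."),
]
answer = "You receive 25 vacation days [1] and request them in the HR portal [2]. See also [7]."
print(number_sources(passages))
print(cited_sources(answer, passages))
```

Checking citations against the passages that were actually retrieved lets an interface link each claim to its source and catch references the model invented, such as `[7]` above.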

What maintenance does a RAG system need?

RAG systems require minimal ongoing maintenance once deployed. The main tasks are periodic updates to the knowledge base (which can be automated), monitoring system performance, and occasionally adjusting retrieval and prompt settings based on user feedback. We provide comprehensive monitoring dashboards and can set up automated data ingestion pipelines to keep your knowledge base current with minimal manual intervention.
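An automated ingestion pipeline can avoid re-embedding the whole knowledge base on every run by re-indexing only documents whose content changed. A minimal Python sketch, assuming each document has a stable ID and has already been extracted to text; `plan_sync` is a hypothetical helper, and persisting the fingerprints between runs (for example alongside the vectors) is left out.

```python
import hashlib

def fingerprint(text: str) -> str:
    # SHA-256 of the document text; any edit produces a different digest.
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def plan_sync(
    current: dict[str, str], indexed: dict[str, str]
) -> tuple[list[str], list[str], dict[str, str]]:
    """Compare current documents with the fingerprints of what is already indexed.

    Returns (ids to (re)index, ids to delete, fingerprints to store once the sync succeeds).
    """
    new_fps = {doc_id: fingerprint(text) for doc_id, text in current.items()}
    to_index = sorted(d for d, fp in new_fps.items() if indexed.get(d) != fp)
    to_delete = sorted(d for d in indexed if d not in new_fps)
    return to_index, to_delete, new_fps

# First run: everything is new.
todo, gone, fps = plan_sync({"a.md": "v1", "b.md": "hello"}, {})
# Later run: a.md edited, b.md removed, c.md added.
todo2, gone2, fps2 = plan_sync({"a.md": "v2", "c.md": "new"}, fps)
print(todo, gone)
print(todo2, gone2)
```

Returning the new fingerprints instead of saving them immediately means a failed indexing run leaves the old state in place, so the next run retries the same documents.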

Contact our team to discuss how our RAG system implementation services can transform how your organization leverages its data.